Let the Regression To The Mean Do Its Thing.

A reflection on how AI is changing the way we think, and what we risk when “looks right” replaces “is right”.

I don’t always know what drives people to strive for excellence.

Some are moved by ambition, others by fear of irrelevance, and a few simply by a sense of responsibility: to the craft, to the outcome, to the truth.

Whatever the motivation, the desire to do something well, and to do it with integrity, is getting harder to hold onto.

Especially in the age of AI.

In statistics, regression to the mean describes how extremes tend to settle back into the average over time. In work, in culture, in technology…it happens too. When we stop questioning, we drift toward the average, even if we started out aiming higher.
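For anyone who wants to see the statistical version in action, here is a minimal sketch. It assumes every observed score is a stable "skill" plus random noise; the function name, the 10% cutoff, and the noise level are all illustrative choices of mine, not anything canonical. It picks the top performers on a first measurement and looks at how the same people score on a second one.

```python
import random
import statistics


def regression_to_mean_demo(n=10_000, top_fraction=0.1, seed=0):
    """Simulate two noisy measurements of the same underlying skill.

    Assumption (illustrative only): score = skill + independent noise,
    with both drawn from a standard normal distribution.
    """
    rng = random.Random(seed)
    skill = [rng.gauss(0, 1) for _ in range(n)]
    first = [s + rng.gauss(0, 1) for s in skill]
    second = [s + rng.gauss(0, 1) for s in skill]

    # Select the people who looked most extreme on the first measurement.
    k = int(n * top_fraction)
    top = sorted(range(n), key=lambda i: first[i], reverse=True)[:k]

    first_top = statistics.mean(first[i] for i in top)
    second_top = statistics.mean(second[i] for i in top)
    overall = statistics.mean(first)
    return first_top, second_top, overall


first_top, second_top, overall = regression_to_mean_demo()
print(f"top group, first measurement:  {first_top:.2f}")
print(f"top group, second measurement: {second_top:.2f}")
print(f"everyone, first measurement:   {overall:.2f}")
```

Under these assumptions, the top group still scores above average the second time, but noticeably closer to it. Part of what made them look extreme the first time was luck, and luck does not repeat on demand.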

The Disappearance of Discipline

We’ve entered a phase where speed and scale are treated as default virtues, and fluency is mistaken for intelligence. And while AI has undeniably expanded what’s possible, it has also chipped away at something deeper: the discipline of thinking critically, of asking hard questions, of verifying before trusting.

We don’t debug ideas anymore; we prompt, skim the output, and move on.

“Trust but verify” isn’t fading; it’s being actively deprecated.

There’s a new culture forming around these tools, one that quietly encourages disengagement from the fundamentals.

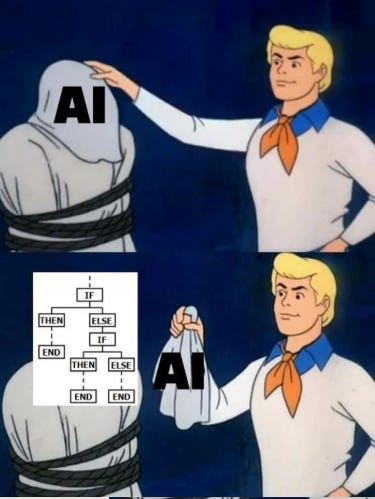

The further you zoom out from the code, the more acceptable it becomes to ignore what’s underneath. AI is applied data science, still.

It’s machine learning, statistics, labeling, optimization. It’s methods and trade-offs. But we’re treating it like a vending machine. Put in a question, get a usable answer, move on. We no longer ask if the model should be doing what it’s doing, just whether it can. And as long as the result “looks right,” nobody really checks if it is right.

This is a point Yejin Choi has made often: we’ve become so obsessed with scaling intelligence that we’ve stopped asking what kind of intelligence we actually want. The largest models aren’t necessarily the most useful ones. Sometimes, they just produce more confident noise, statistical fluency masquerading as insight.

But nuance rarely scales. And in most workflows, scale wins by default.

Some have called this the 'reliability gap': the space between how persuasive a model sounds and how trustworthy it actually is. It’s a concept thinkers have warned about for years, and the danger lies in how easily that fluency is mistaken for accuracy.

And it’s not just the hallucinations. We’ve talked about hallucinations before, and there’s a lot of room for critical exploration there.

But the deeper problem is that we keep believing the outputs anyway, because they let us move on.

And moving on is SO seductive. It feels like momentum. Like clarity. Like closure.

It relieves us from the discomfort of ambiguity, from the friction of not knowing. It tells us the hard part is over, even if we never actually faced it.

And in high-velocity environments, that relief becomes a currency.

We start optimizing for it. Celebrating it. Even mistaking it for good judgment.

But that gap, the one between what feels right and what is right, widens every time we accept “close enough” as the finish line.

Every time we treat coherence as correctness, we teach the system, and ourselves, that depth is optional.

The Drift Toward Average

And this is the kind of drift that doesn’t announce itself. It creeps in through cultural shifts, through processes that prioritize pace over precision, through tools that generate confidence without evidence, through environments where thoughtful skepticism feels out of place.

We’ve replaced rigorous thinking with the appearance of rigor.

A system built not to encourage doubt, but to reward velocity.

We’re caught somewhere between our tendency to trust confidence over truth, and Goodhart’s Law: when a measure becomes a target, it ceases to be a good measure.

And in systems where velocity becomes the target, quality quietly falls away. We start optimizing for output instead of outcomes. Fluency replaces depth. Progress becomes performance.

The real risk isn’t AI replacing humans. It’s us forgetting how to challenge assumptions, because we’ve outsourced the discomfort of uncertainty to a model that never admits it doesn’t know.

We used to have a culture, at least in pockets, that valued asking questions that slowed things down. That saw pressure-testing a method as a sign of care, not obstruction.

But now, that kind of scrutiny is seen as political. Distracting. A problem to manage, not a principle to protect.

We’ve reached the point where even “just checking” becomes performative, a polite nod to diligence rather than a serious act of verification. The phrase “can you sanity-check this real quick?” rarely means check for sanity. It means don’t block the momentum.

What we collectively call AI didn’t create this dynamic.

It just revealed how thin our guardrails were in the first place.

It exposed how ready we were to offload judgment, how eager we are to accept good-enough answers if they help us feel productive, how allergic we’ve become to uncertainty.

We’ve built tools that reflect our best ambitions and our worst habits, and they’re reshaping the way we think, work, and justify outcomes.

Not everything can be saved.

Not every process deserves to be fixed.

Not every shortcut needs to be debated.

Sometimes, the most principled thing you can do is step back, watch the regression to the mean unfold, and choose not to be part of it.

Don’t worry. The meaningful work is still there. It’s slower. It’s harder. It requires showing up with standards in a room where shortcuts are the default.

But it’s worth doing.

So, what part of your work are you still willing to slow down for?

Until next time,

x

Juliana

This: https://open.substack.com/pub/julianajackson/p/ai-regression-to-the-mean?r=3igs1&selection=5b328cd3-e099-4851-8f13-34a2916909bb&utm_campaign=post-share-selection&utm_medium=web