Surveillance Capitalism in AI. ChatGPT indexed chats? A feature! Not a bug. /s

Is ChatGPT monetizing our not-so-private conversations?

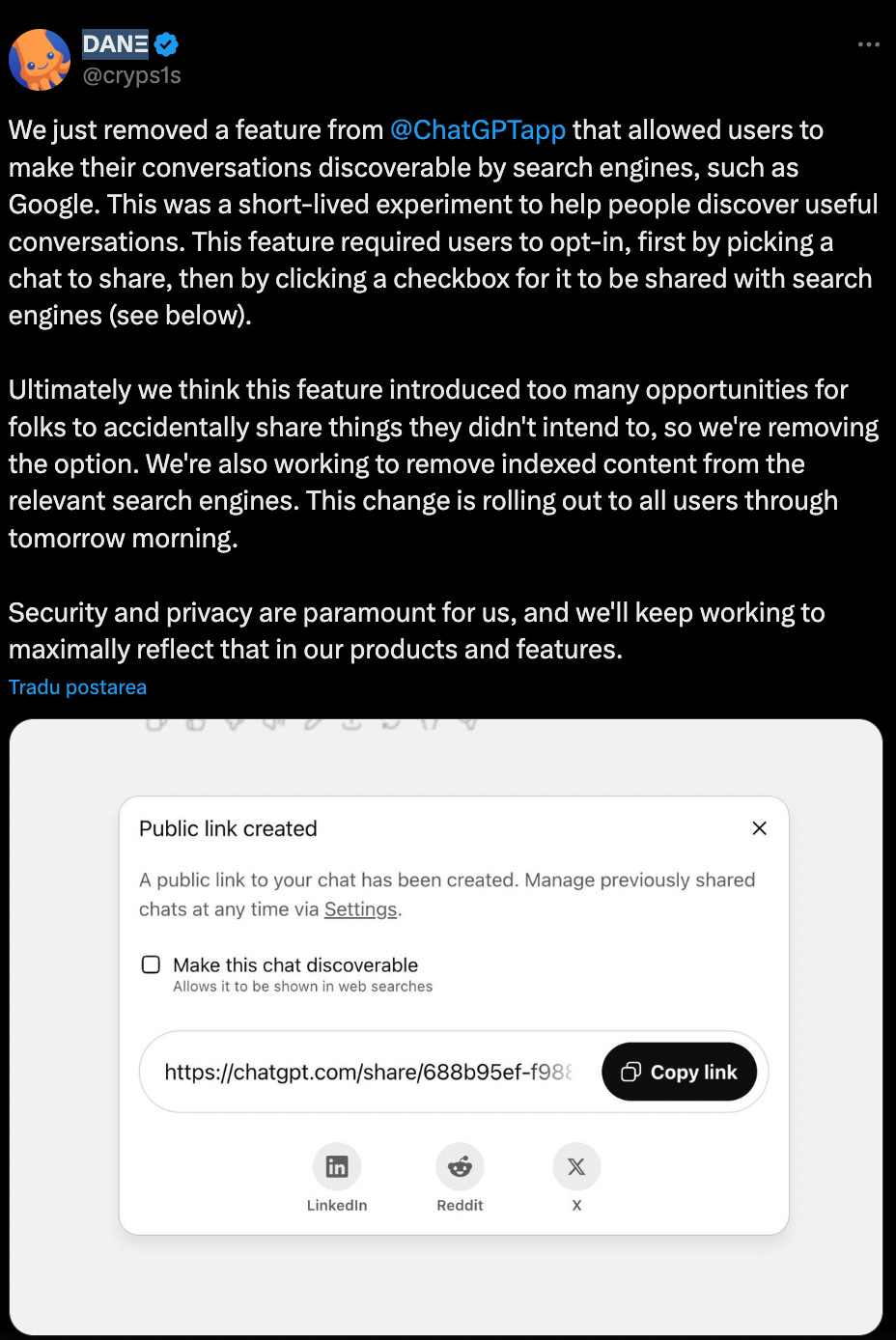

UPDATE: Just in!

Since I hit send, OpenAI removed the searchable conversations feature and wiped all indexed content from search engines. No more ChatGPT conversations are discoverable on Google.

They admitted the feature "introduced too many opportunities for folks to accidentally share things they didn't intend to", which is corporate speak for "our UI was designed to trick people into making private conversations public."

The fact that they acted quickly doesn't change the core issue: they shipped a feature that prioritized data extraction over user privacy, used confusing UI to maximize opt-ins, and only reversed course when people noticed. The default assumption was still "your conversations should be public unless you're savvy enough to opt out."

Quick one from me this week. So, you've likely seen (or used) ChatGPT's "share link" feature. It’s presented as a convenient tool, a simple way to collaborate or show off an interesting AI interaction. But what if that "share" button is actually a gateway to making your deepest thoughts, private struggles, or even sensitive business discussions available to the entire internet?

Well that just happened today. And instead of more of us being furious, scared and justifiably worried, a part of the internet decided that this is a huge opportunity for “growth”. A huge opportunity for targeting these poor folks who woke up this morning with their whole life available for the internet to see.

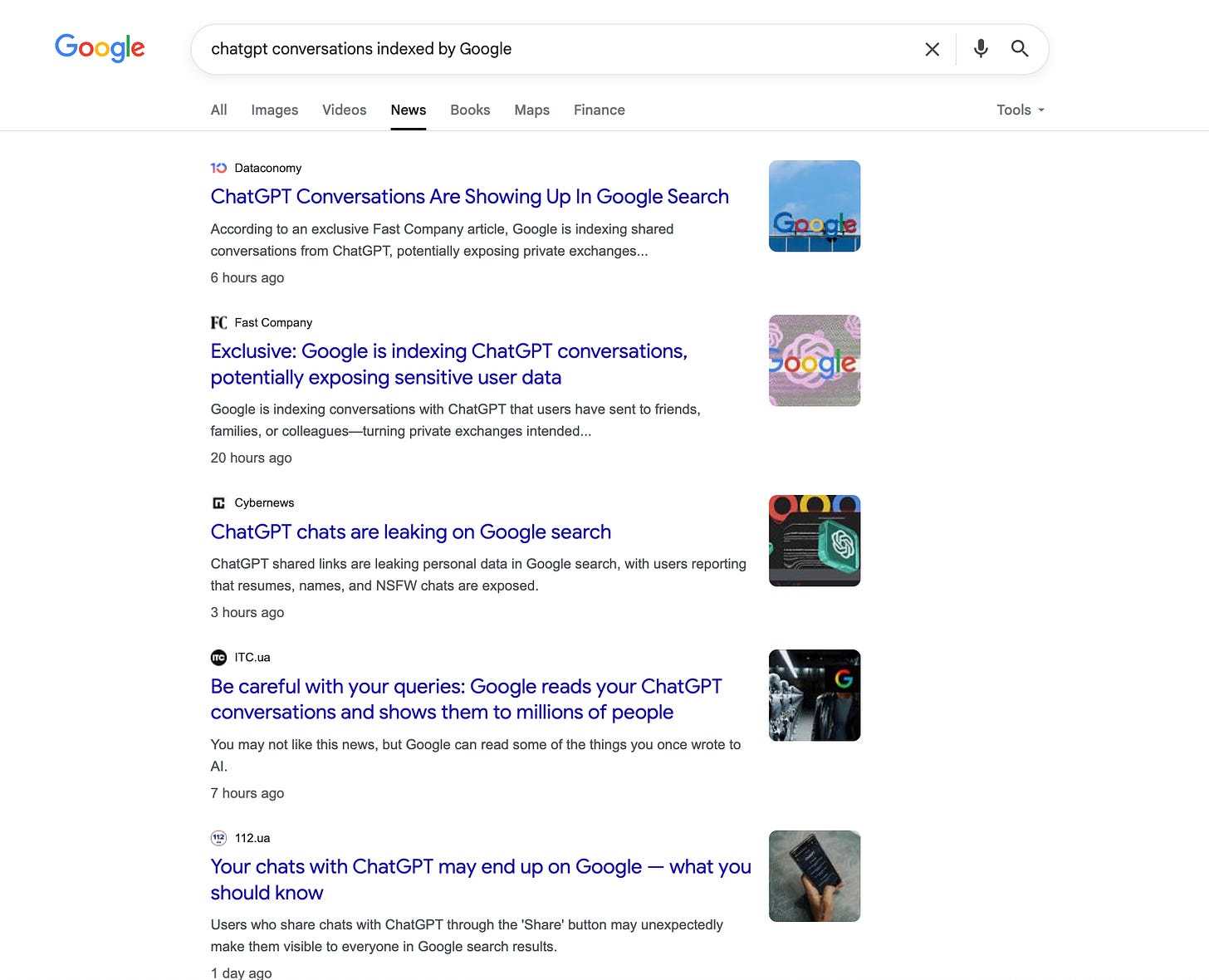

Since yesterday evening, reports, social posts and news articles have been flooding in, talking about how shared ChatGPT conversations ended up indexed by Google. Surprise surprise.

This means your private data, personally identifiable data, things you'd never intentionally publish, are now searchable by anyone, IF you have a published shared chat with ChatGPT.

Now, there are two sides to this conversation. One, OpenAI says in their documentation that if you don’t toggle the “Make this Chat Discoverable” option in their settings interface, it won’t end up indexed by search engines, like Google.

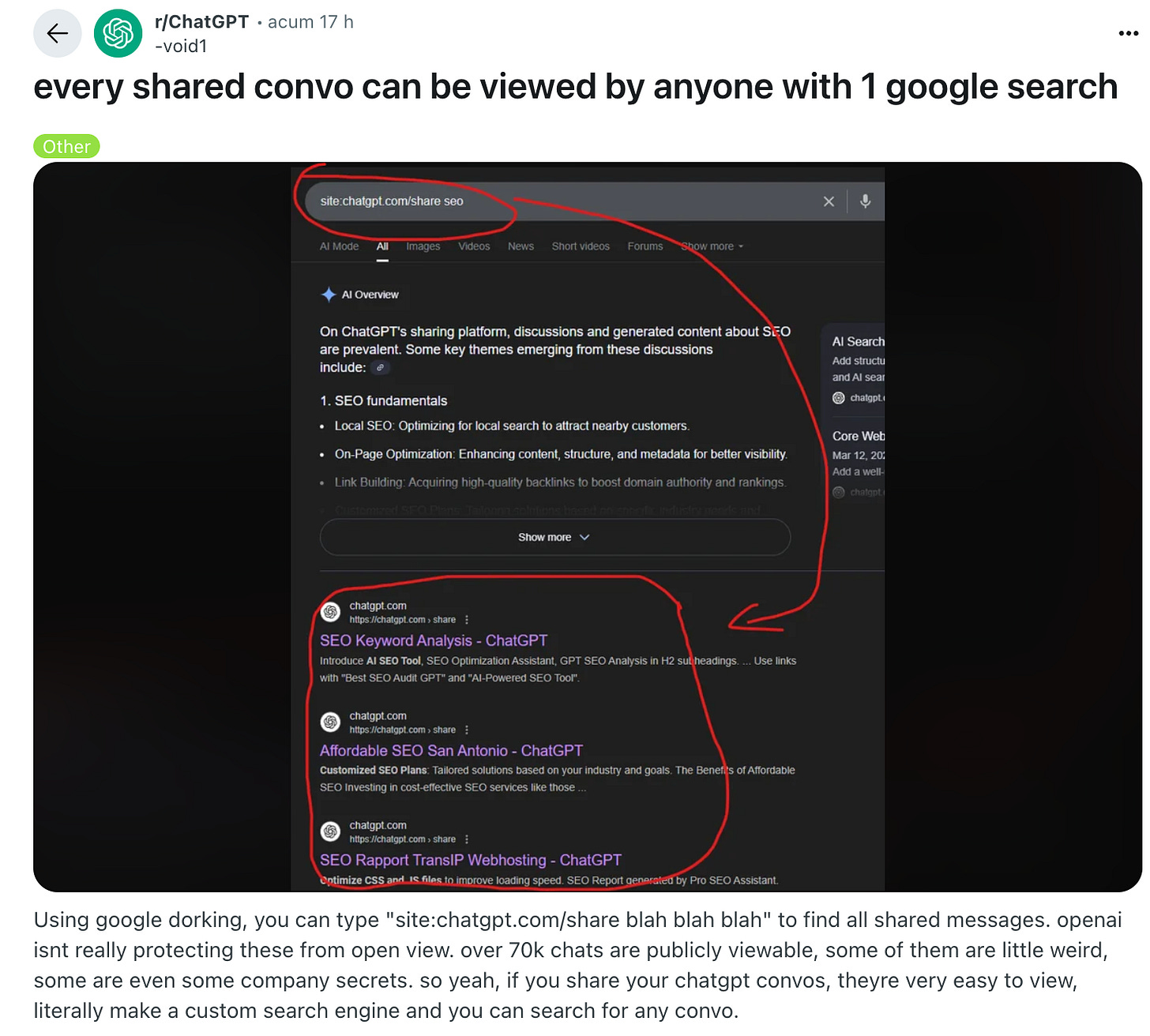

SURE, but the other side is, from my limited SEO knowledge (and please, SEO folks reading this, correct me if I got this wrong), as long as a link has been published on the internet, it's pretty much fair game.

If a shared ChatGPT link exists and is placed anywhere on the public web (someone tweets it, posts it on a forum, links to it from a blog), a Google crawler is likely to find it. Or if your intended recipient shared it with someone else… kinda the same thing.

I researched today to understand this better, before sharing with you all. (PS: thanks Adrian, Slobodan and Jason for sanity checking me).

This is my understanding:

`robots.txt` and `noindex`: `robots.txt` tells crawlers what not to crawl. The `noindex` meta tag or HTTP header tells crawlers not to index a page, even if they crawl it.

The catch is that for `noindex` to be effective, Googlebot must crawl the page and see that directive. If a page is linked widely before the `noindex` is applied, or if the `noindex` is somehow bypassed (Google's quality algorithms deem it important enough to index anyway, or the page is referenced in ways that circumvent the directive), it can still appear.
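To make the difference between the two mechanisms a bit more concrete, here is a minimal Python sketch. It is only an illustration, not how OpenAI or Google actually implement anything: the function names (`crawler_may_fetch`, `is_noindex`), the example.com URLs and the sample robots.txt are all made up for this example, and the header parsing is deliberately simplified (it ignores user-agent-specific `X-Robots-Tag` values).

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def crawler_may_fetch(robots_txt, url, user_agent="Googlebot"):
    """robots.txt side: may this crawler fetch the URL at all?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> / <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key.lower(): (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def is_noindex(html, headers=None):
    """noindex side: does the page (or its HTTP headers) ask not to be indexed?"""
    directives = []
    # Header names are case-insensitive, so compare in lowercase.
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            directives += [d.strip().lower() for d in value.split(",")]
    meta_parser = RobotsMetaParser()
    meta_parser.feed(html)
    directives += meta_parser.directives
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives


# Hypothetical inputs, invented for this example.
robots_txt = "User-agent: *\nDisallow: /private/\n"
page_with_tag = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
page_without_tag = "<html><head><title>Shared chat</title></head></html>"
```

Note how the two checks interact: a crawler only ever gets to read a `noindex` tag on a page it is allowed to fetch. If `robots.txt` blocks the URL, the crawler never sees the tag, which is one well-documented way a page that was meant to stay out of search results can still show up there.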

So, if the assumption (because, truthfully, I don’t know) is that OpenAI adds a noindex tag to shared links unless you specifically opt in to indexing, then what's happening?

Their `noindex` implementation might not be fully effective?

Links are being shared in places that Google does crawl and index, and perhaps Google is overriding the `noindex`?

The "Make this conversation discoverable" toggle adds an `index` directive, but perhaps the "no indexing by default" implementation isn't as good as it should be, or there are other ways these links become discoverable.

And ofc, if a user does toggle the "make conversation discoverable" option, then they're explicitly giving permission for Google to crawl and index it, making it fully public.

SEO folks, let me know in the comments or hit reply to this email if you found out what’s really going on.

Also, OpenAI's FAQ states: "No, shared links are not enabled to show up in public search results on the internet by default. You can manually enable the link to be indexed by search engines when sharing." (Source: https://help.openai.com/en/articles/7925741-chatgpt-shared-links-faq)

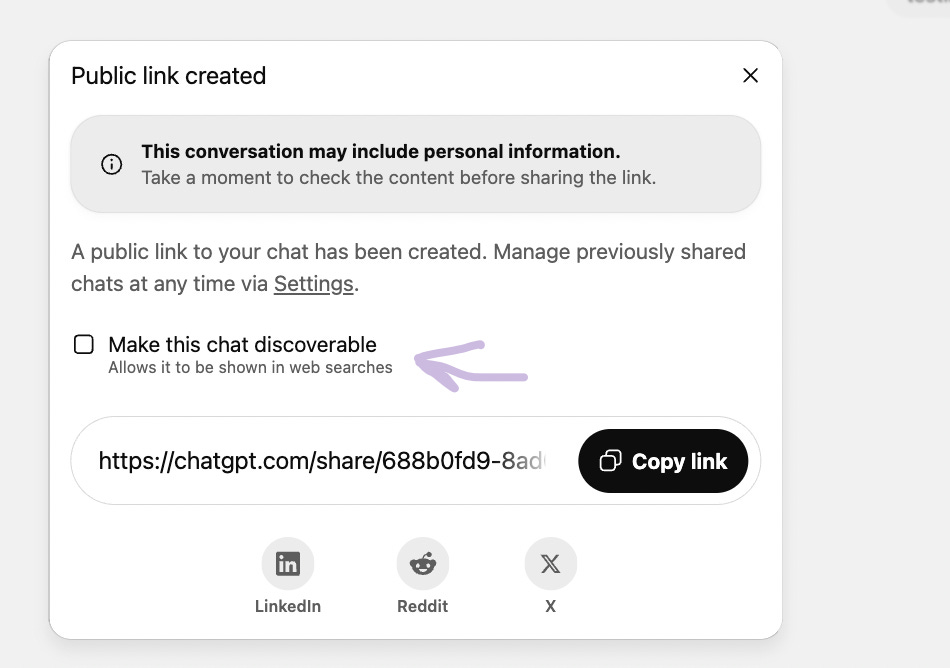

WELL, yes but also, NO. Let’s take a look at the user experience here.

Is the messaging clear? Not at all.

the primary action label "Make this chat discoverable" is vague and misleading

critical information is buried in secondary text that users typically ignore

technical jargon ("web searches") assumes knowledge most users lack

there is no clear explanation of permanence or scope of exposure

Are users giving informed consent for their conversations to be shared on the internet? Consent maybe, informed, not at all.

users cannot make an informed decision without understanding the full implications

the interface obscures rather than clarifies the actual consequences

the warning placement and styling suggests boilerplate rather than critical information

there is no mention of permanence, deletion limitations, or global accessibility

Is the information in this window following correct information architecture practices? Absolutely not.

most critical information (permanent public indexing) has the lowest visual priority

this benign sounding action gets primary visual weight

the warning feels disconnected from the specific choice being made

Is this experience providing the right level of accessibility and inclusion? No way in hell.

it assumes technical literacy around web indexing, search engines, and data permanence

there is no accommodation for users with varying levels of digital fluency

also, language barriers not considered (technical terms may not translate conceptually in other languages)

Is it transparent? Does it build trust with the users? Ha. Nope.

the interface actively conceals rather than reveals true consequences

it’s designed to maximize opt-ins rather than informed decisions (just like consent banners baby)

a truly privacy focused design would make it very difficult for sensitive content to become public unintentionally.

And finally, does it prioritize user control? Wouldn’t that be cute.

so, while technically providing a choice, the framing manipulates that choice. period.

there is no clear path to understand or reverse consequences.

DARK PATTERNS GALORE. And since I’ve found out that these Dark Patterns have funky names, here is what is going on here from that POV.

Trick Wording. Meaning using "discoverable" instead of "publicly searchable forever" fundamentally misrepresents what the user is agreeing to.

Privacy Zuckering (named after Facebook's practices, and my favorite). Getting users to share more personal information than they intended by obscuring the real scope and permanence of that sharing.

Bait and Switch perhaps? Meaning the user thinks they're making content accessible to their intended recipient, but they're actually publishing it to the entire internet.

Roach Motel. Means it’s easy to get in (one click to share publicly), but the fine print reveals you can't easily get out (shared links persist even after deletion).

Also, another pattern shared by my friend and industry pillar, Craig Sullivan, is ambiguity. Some users may be confused and assume that “discoverability” relates to their own ability to find or access their chat later, so they may select the option thinking it's something else.

These dark patterns and UX issues are not new when it comes to asking for consent. This is very similar to the cookie consent banners we've all become depressingly familiar with across the web.

Think of the "Accept All" as a bright, prominent button while burying "Manage Preferences" in smaller, grayed out text that leads to deliberately confusing menus with dozens of pre-selected tracking categories and legitimate interests :)

The same practices are at play here too, making the privacy violating option appear helpful and frictionless while obscuring the true scope of what you're agreeing to behind technical jargon and buried fine print.

But, as with many other things, we have normalized this deceptive approach to consent so much that it's now being deployed in contexts far more intimate than website tracking, aka our private conversations with AI systems that many people sadly treat as digital confidants. And I do not judge them for it. Many people in the world are alone, have no one to talk to, and do not know any better than to use these models to mimic some sort of normality.

So, the UX playbook for tricking users into surveillance has been perfected throughout the years and now it's being applied to extract and publicize our most unguarded thoughts and creative processes. And…

This, my dear internet friends, is a perfect example of Surveillance Capitalism at work.

According to Harvard Business School professor Shoshana Zuboff, surveillance capitalism is:

"an economic order that claims human experience as free raw material for hidden commercial practices of extraction, prediction, and sales."

It works by transforming our private human experience into "behavioral data," which is then used to predict and modify our future behavior for profit.

(Source: Harvard Gazette interview with Shoshana Zuboff)

If we take this concept to what happened today with ChatGPT, although there are so many examples of this at play, we have our free raw material, meaning our conversations, often intimate or proprietary. Then there are the hidden commercial practices of extraction, the "share" button, with its ambiguous "Make this conversation discoverable" toggle, acting as a subtle mechanism for this extraction. It looks like a neutral feature, but it funnels private data into publicly indexable formats.

Then we normalize this “exposure” by allowing such a toggle to exist without prominent, clear, and repeated warnings. The system normalizes the idea that sharing a link (even with a limited audience in mind) can inherently lead to public exposure and subsequent data collection. It shifts the burden of privacy entirely onto the unsuspecting user, blaming them when their "private" thoughts are exposed. Which is what half of the internet is doing right now, as I am writing this rant article.

This is CONSISTENT with many comments I got today on my own LinkedIn posts, and on other posts where people were saying things along the lines of “I'm confused as to what else people expect when they deliberately click a link to make content publicly available.” Which takes me to…

The "Opportunity" Fallacy

The most disturbing aspect is how parts of the digital marketing community reacted to this event today. Instead of seeing a data privacy disaster, they see a "data opportunity", a fresh source of "intent signals" and "personalization" data.

It shows how we've been conditioned to accept surveillance as inevitable, even beneficial. The response pattern here from folks on LinkedIn, treating leaked private conversations as a goldmine for "personalization" rather than a violation, reveals something deeply troubling about our relationship with AI and data collection in general.

And also this mirrors the broader trajectory of AI adoption. People are rushing to integrate AI into their most intimate processes like journaling, therapy, creative work, strategic planning while simultaneously being told that maximum data collection is necessary for AI to "serve them better." The more personal and valuable your data, the more essential it supposedly becomes to collect it and enrich it, innit?

And this normalization runs so deep that when private conversations leak, the dominant reaction isn't "this is a violation" but "how can we monetize this insight into human behavior?"

We seem to want these AI systems to be designed not primarily to help users, but to extract maximum behavioral and psychological data from them. And if you think that’s exaggerated, God, this is already happening in so many ways.

It’s a very intimate and quiet surveillance, where AI tools are framed as trusted confidants and creative partners specifically to access our most unguarded thoughts and behaviors. This event today is not a bug but a feature that accidentally became visible, and it showed a lot of folks' and companies' true colors.

My biggest concern is that we're training ourselves to think surveillance is care, and exploitation is optimization. And a mindset shift like this might be harder to reverse than any individual privacy breach.

And, as I am wrapping up this newsletter, my dear friend Slobodan Manic messaged me on Slack and said: “remember the chatter last week when Sam Altman said people shouldn’t share their personal lives and use ChatGPT as a therapist, because it's not safe…? I guess he knew this was next.” Coincidence? Nah, I’m too old for that shit.

So, my message to you if you’re still reading this is to fight back against the normalization of exposure.

Assume nothing is private. Because, it’s not. When interacting with any AI tool, or any online platform with sharing features, operate under the assumption that anything you type or share could eventually become public.

Make sure you scrutinize every setting. If you must use "share" or "link" features, investigate every privacy setting. Look for terms like "indexing," "public," or "search engines." If it's not crystal clear, do not proceed.

Please limit what you share. Be extremely careful about what personal, confidential, or proprietary information you input into AI tools, especially those connected to the internet. (Shouting out the offline AI expert Shawn David here.)

And lastly, demand true privacy by design. As users and professionals, we must actively push back. Demand clearer, more intuitive privacy controls. Advocate for strong, privacy first defaults. Challenge the systems that profit from your unwitting exposure.

Stay safe out there :)

PS: needless to say, delete your shared ChatGPT conversations like yesterday, if you have any.

Until next time,

x

Juliana

Thanks Mrs J.! I have not shared a chat yet, but have wanted to, in order to collaborate and spar with others. I'll definitely be that much more wary, but still keen to share what I've discovered.

Question: how do we share our prompts/chats with a closed group?