NLP Tools, AI Snake Oil, and a Checklist You Can Actually Use

Before you buy that “emotion detection” tool, read this.

Hey everyone! Just a quick one from me today.

I’ve released something today that I hope saves a few teams from wasting time, budget, or credibility. It’s a practical evaluation checklist for NLP tools, especially the ones that swear by their emotion decoding and predictive analysis. AND, it’s free :)

To measure emotions accurately from text, you need thousands of labeled examples covering a wide spectrum of feelings, tones, and expressions, across languages, cultures, and contexts. That means manually annotating everything from “I’m fine” (which might be neutral or passive-aggressive) to “ugh, of course this happened again” (which could be frustration, sarcasm, or resignation).

Emotion detection through NLP isn’t science fiction, let’s be clear. There are established datasets that label a wide range of emotions across large volumes of text. But these models take serious work: large-scale annotation, validated taxonomies, cultural nuance, and tons of iteration. Even with solid data, emotion is still subjective: annotators often disagree, and context (sarcasm, tone, culture) radically shifts meaning.
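To make the "annotators disagree" point concrete, here is a rough sketch of one common way to measure it, Cohen's kappa, which compares how often two annotators agree against how often they would agree by chance. The labels and both annotators' answers are invented for illustration, and picking kappa (rather than another agreement metric) is my assumption here, not a rule.

```python
from collections import Counter

# Hypothetical labels from two annotators on the same 10 comments (made up).
annotator_a = ["joy", "joy", "anger", "anger", "sadness",
               "neutral", "neutral", "neutral", "anger", "joy"]
annotator_b = ["joy", "neutral", "anger", "sadness", "sadness",
               "neutral", "anger", "neutral", "anger", "neutral"]

def cohen_kappa(a, b):
    """Agreement between two annotators, corrected for chance agreement."""
    n = len(a)
    # Share of items where both annotators picked the same label.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Agreement expected if each annotator labeled at random
    # according to their own label frequencies.
    freq_a, freq_b = Counter(a), Counter(b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    return (observed - expected) / (1 - expected)

raw_agreement = sum(x == y for x, y in zip(annotator_a, annotator_b)) / len(annotator_a)
kappa = cohen_kappa(annotator_a, annotator_b)
print(f"raw agreement: {raw_agreement:.2f}")
print(f"Cohen's kappa: {kappa:.2f}")
```

In this toy example the two annotators agree on 6 out of 10 comments, but once chance agreement is removed the kappa lands noticeably lower. If humans can't agree on the label, it's worth asking what a model's score is actually being measured against.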

So no, it’s not impossible. But it’s not magic either. And most of what’s being marketed as “emotional AI” right now skips all of that complexity in favor of a neat label slapped on behavior like scroll depth, device type, or page exit speed. That’s not emotion detection. That’s inference with a lot of confidence :))

What’s going on with NLP, and why is everyone suddenly selling it?

Natural Language Processing (NLP) is having its mainstream moment. Almost every analytics, CX, and product tool now claims to “understand” unstructured text: reviews, chats, surveys, support tickets. Which sounds powerful. Who wouldn’t want to mine all that qualitative data for real-time insights? (also omg don’t get me started on real-time)

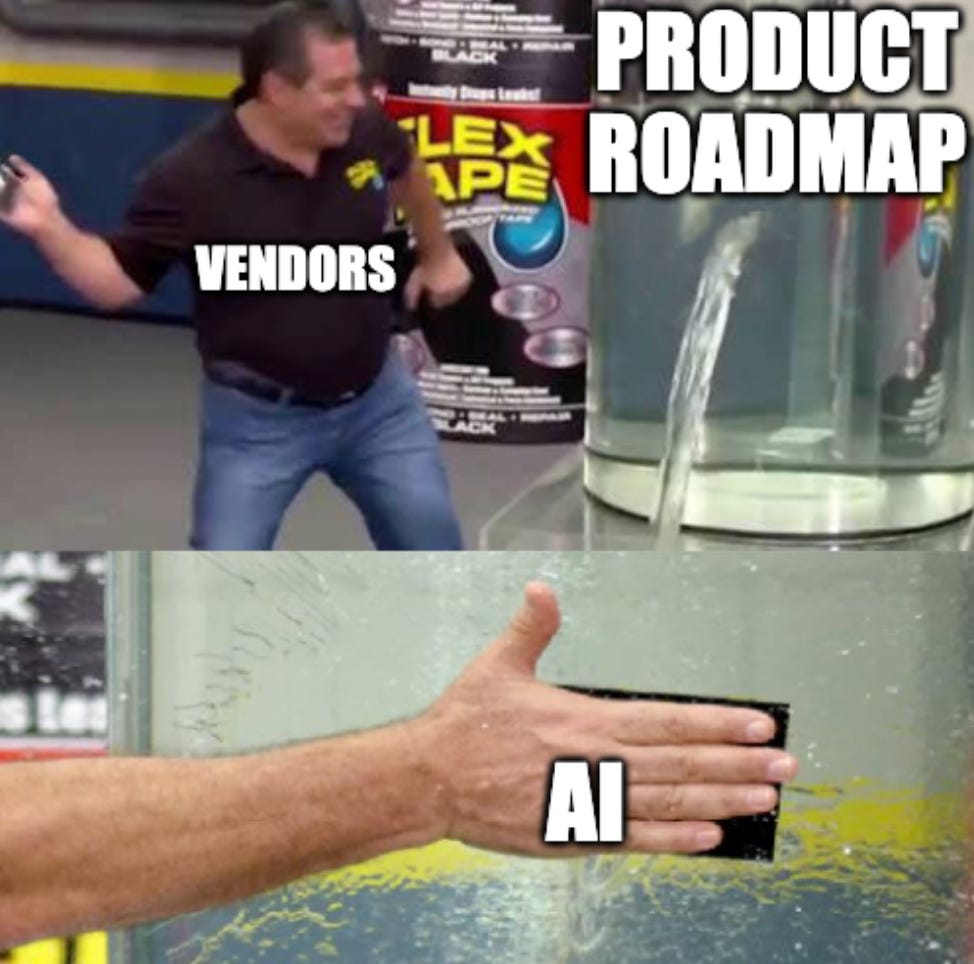

The problem is most of these tools weren’t built for it. They’ve retrofitted LLM wrappers or added pretrained sentiment models to justify a product roadmap pivot. There’s little understanding of what makes unstructured data messy, subjective, and hard to model. Context is flattened. Language is oversimplified. And the output is often reduced to a score, delivered with a straight face and a 95% “accuracy” badge.

Accuracy is an easy metric to game, especially in sentiment classification. If 80% of your comments are “neutral,” a model that always guesses “neutral” will still report 80% accuracy. But that tells you nothing about nuance, misclassification risk, or edge cases, especially in multilingual, emotionally charged, or culturally specific content.
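Here is a minimal sketch of that "always guess neutral" trick in plain Python. The 80/12/8 split is a made-up dataset, and the "model" is just a hard-coded guess, so treat it as an illustration rather than a benchmark.

```python
# Hypothetical dataset: 80 neutral, 12 negative, 8 positive comments (made up).
y_true = ["neutral"] * 80 + ["negative"] * 12 + ["positive"] * 8

# A "model" that never reads the text and always answers "neutral".
y_pred = ["neutral"] * len(y_true)

def accuracy(true, pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(true, pred)) / len(true)

def recall(true, pred, label):
    """Of all items that truly have `label`, how many did the model catch?"""
    predictions_for_label = [p for t, p in zip(true, pred) if t == label]
    if not predictions_for_label:
        return 0.0
    return sum(p == label for p in predictions_for_label) / len(predictions_for_label)

acc = accuracy(y_true, y_pred)
recalls = {label: recall(y_true, y_pred, label) for label in sorted(set(y_true))}

print(f"accuracy: {acc:.2f}")
for label, value in recalls.items():
    print(f"recall[{label}]: {value:.2f}")
```

Accuracy prints as 0.80, while recall for both "negative" and "positive" is 0.00. The model never finds a single unhappy customer, and the headline number still looks respectable.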

And yet, accuracy is the first number on the slide.

If a vendor leads with accuracy and doesn’t back it up with per-class F1 scores, confusion matrices, and real-world examples, you should question their entire evaluation process.
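If you've never seen what that evidence looks like, here is a small sketch that builds a confusion matrix and per-class precision, recall, and F1 from scratch. The counts are invented for illustration, and the function names are my own, not from any particular library.

```python
from collections import Counter

LABELS = ["negative", "neutral", "positive"]

# Hypothetical (true label, predicted label) counts, invented for illustration.
pair_counts = Counter({
    ("neutral", "neutral"): 76, ("neutral", "negative"): 2, ("neutral", "positive"): 2,
    ("negative", "negative"): 3, ("negative", "neutral"): 9,
    ("positive", "positive"): 4, ("positive", "neutral"): 4,
})

def confusion_matrix(counts, labels):
    """Rows are true labels, columns are predicted labels."""
    return [[counts[(t, p)] for p in labels] for t in labels]

def per_class_metrics(matrix, labels):
    """Precision, recall, and F1 for each label."""
    metrics = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        predicted = sum(row[i] for row in matrix)  # column total
        actual = sum(matrix[i])                    # row total
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[label] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics

matrix = confusion_matrix(pair_counts, LABELS)
metrics = per_class_metrics(matrix, LABELS)
total = sum(sum(row) for row in matrix)
accuracy = sum(matrix[i][i] for i in range(len(LABELS))) / total

print(f"accuracy: {accuracy:.2f}")
for label, row in zip(LABELS, matrix):
    print(f"true {label:<8} -> predicted {dict(zip(LABELS, row))}")
for label, m in metrics.items():
    print(f"{label:<8} precision={m['precision']:.2f} recall={m['recall']:.2f} f1={m['f1']:.2f}")
```

With these made-up numbers, overall accuracy is 0.83, but F1 for "negative" is only about 0.35 because 9 of the 12 truly negative comments were labeled neutral. That gap is exactly what a single accuracy number hides and what the matrix makes visible.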

I’m not anti-innovation. I just want it done right.

Let me be clear: I want this to work. I want tools to push the limits of what’s possible, really work, and deliver on their promises. But as someone who has hands-on experience using AI and NLP and co-building models for the last 3 years, I’ve seen the good, the bad, and the ugly that come with this.

So this isn’t about being a skeptic for sport. It’s about doing better.

In the race to be first, we should be chasing precision, clarity, and transparency, not just market optics. And truthfully, right now, everyone’s slapping “AI” on their product roadmap. Colour me suspicious lol.

I won’t name and shame, but c’mon. Yes, me scrolling twice on a page clearly indicates I’m comparing you to competitors, I feel unsafe about your pricing, and my emotional driver is... competition. That’s not AI. That’s astrology mixed with JavaScript.

It’s so messy out there, and what’s worse is these tools often make it into orgs because they look good on paper or promise easy insight. But under the hood you find a lot of probabilistic guesswork, usually unexplainable, often unvalidated, and sold without guardrails.

This is very dangerous. When bad models get baked into customer experience, risk analysis, or brand strategy, the downstream impact is real. Especially in regulated or high-stakes environments, you can’t afford to be led by marketing claims disguised as intelligence.

I’m not here to knock the hustle. There are vendors taking this seriously, grounding their models in real data, transparency, and validation. But they’re still the minority.

As a community, we need boundaries, standards, and shared literacy around what’s real and what’s not. We need to keep educating each other and calling it when the emperor has no… vector embeddings 😂 (I’m keeping this one, sorry not sorry.)

That’s why I put together this NLP Checklist. It’s a clear, structured checklist of what to ask, what to look for, and where the red flags hide. It’s not meant to cover everything NLP-related of course; AI moves too fast for that. But it’s built on principles that aren’t changing anytime soon:

Garbage in, garbage out.

Explainability > magic.

Precision and recall > “95% accuracy.”

If you can’t audit it, you shouldn’t deploy it.

And critical thinking isn’t optional.

You can use the checklist to evaluate vendors, justify buying decisions, or push back internally when something seems off. It’s free. Take it, adapt it, break it, whatever.

It also contains explanations of terms and examples so you can build more confidence in the topic.

You’ll find it here: NLP Tool Evaluation Checklist

If you want to learn more about NLP, I wrote this article 2 years ago and the information is still pretty accurate: Introduction to Natural Language Processing (NLP) and its Business Applications

PS: Make sure you let me know if this was useful or if you need help evaluating any tools or your own AI offering ❤️

More soon. Back to work.

x,

Juliana
