AI's Middle Layer: Where the Real Money's Made (or Lost) in the Wrapper Economy

The wrapper economy: Understand the money, risks, and reality of building applications on LLMs.

Most Saturday mornings I show up at the keyboard with only a rough idea of what the newsletter will become; the caffeine does the rest. This week, Myles Younger nudged the direction early by tagging me in a LinkedIn post asking for a realistic map of AI marketing stacks, one that doesn’t airbrush incumbents out of the picture.

His post lit up a larger question I’ve been circling for months: where did the current explosion of “AI everywhere” really begin, and why does it look so uneven across the “toolscape” we use every day?

In June 2017, the transformer architecture reshaped the trajectory of AI, disrupting the technology and economics of EVERYTHING. It all started with the Google paper "Attention Is All You Need." The rest is history.

Training costs plummeted, token supply ballooned, and suddenly every product team with a JSON endpoint could put an LLM into its stack.

This gave rise to the wrapper economy: a sprawling layer of copilots, workflow agents, orchestration frameworks, and safety rigs that package raw model power into something operators, marketers, and analysts can actually use.

I spent the weekend mapping that wrapper layer: who makes money, who just pipes calls to OpenAI, where margins leak, and how regulation might reroute the value chain. If your product, budget, or roadmap touches generative AI, the dynamics behind these wrappers are already shaping your next quarter, whether you see them or not.

So what you’re about to read is the outcome of a rabbit hole fueled by a lot of caffeine, Nicorette, media articles, and sophisticated Deep Research prompts.

NB: It's worth emphasizing that my analysis isn't suggesting the entire AI industry is just made out of "wrappers."

Far from it: there's tremendous innovation happening across the field, from fundamental research to novel applications.

The wrapper economy is simply one fascinating market outcome of transformers and LLMs intersecting with basic human needs: the desire to make powerful technology accessible, usable, and integrated into daily workflows.

A few of my readers asked for a smoother on-ramp, so here’s a TLDR with the core concepts and takeaways of this week’s newsletter.

TLDR

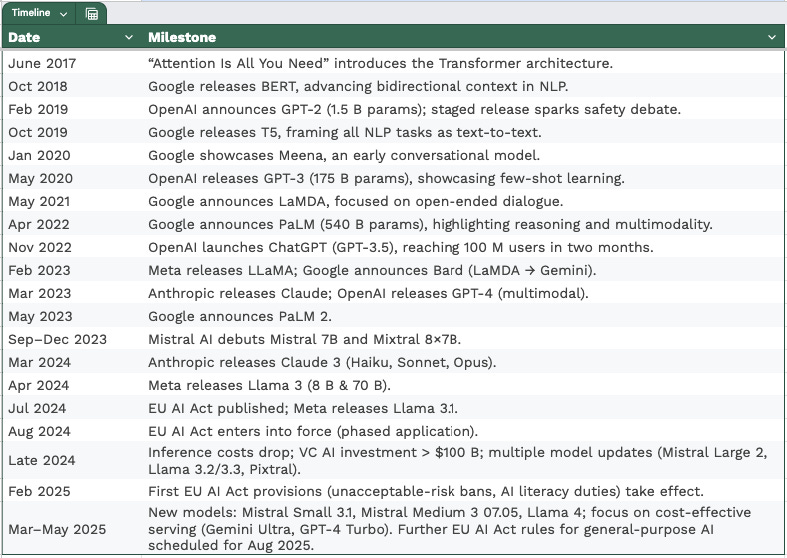

The Development of Large Language Models: Key Events and Dates (2017-present)

Sources for table data:

Google releases BERT (Bidirectional Encoder Representations from Transformers)

OpenAI announces GPT-2

Google releases T5 (Text-to-Text Transfer Transformer)

Google introduces Meena

OpenAI releases GPT-3

Google announces LaMDA (Language Model for Dialogue Applications)

Google announces PaLM (Pathways Language Model)

OpenAI launches ChatGPT

Meta releases LLaMA

Google announces Bard (now Gemini)

Anthropic releases Claude

OpenAI releases GPT-4

Google announces PaLM 2 at Google I/O

Mistral AI emerges and releases its first open-weight models, including Mistral 7B and the sparse mixture-of-experts model Mixtral 8x7B

Anthropic releases the Claude 3 family

Meta releases Llama 3 (8B and 70B parameters)

The EU AI Act

Wrapper

The software layer built on top of a foundation model, adding domain expertise, user experience, governance, or performance tuning so that a marketer, lawyer, or analyst can put it to work. Four common wrapper types:

Vertical agents (Harvey for law, BloombergGPT for finance)

Horizontal copilots (e.g. Microsoft 365 Copilot, GitHub Copilot)

Orchestration frameworks (LangChain, LlamaIndex) and the vector databases they plug into (Pinecone)

Safety and optimization rails (Guardrails, PromptLayer)

Economics: Token prices dropped roughly 90% in the last three years, yet wrapper gross margins still hover around 50%.

Regulatory gravity: The EU AI Act was adopted on 21 May 2024; most high-risk obligations kick in between mid-2026 and mid-2027.

Platform kill-zones: OpenAI's November 2023 DevDay (GPT-4 Turbo) and the March 2025 Gemini 1.5 price drop both wiped out wrapper startups practically overnight.

Strategic stance: Build data moats, embed deeply in workflows, and route traffic across at least two commercial LLMs plus one self‑hosted model.

With that being said, let’s see where this takes us.

From prototype to platform: Understanding the “Wrapper”

The transformer architecture fundamentally changed the cost of using AI for language understanding. Inference costs have fallen rapidly over the past few years, and the processing costs that once kept these powerful models locked away in research labs keep dropping.

In 2022, companies paid around two cents to process 1K tokens. That cost has dropped by approximately 90% in just three years. Combined with ChatGPT's explosive growth to 100 million users within two months of launch, this created a wave of new tools and frameworks.
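To make the arithmetic concrete, here is a back-of-the-envelope sketch in Python. It takes the two cents per 1K tokens and the ~90% drop at face value; the 50M-tokens-per-month workload is a hypothetical example, not a benchmark.

```python
# Assumptions: $0.02 per 1K tokens in 2022 and a ~90% cumulative drop over
# three years (the approximate figures quoted above).
START_PRICE_PER_1K = 0.02   # USD per 1K tokens, 2022
TOTAL_DROP = 0.90           # ~90% cumulative decline
YEARS = 3

end_price_per_1k = START_PRICE_PER_1K * (1 - TOTAL_DROP)
# Constant yearly decline r such that (1 - r) ** YEARS == 1 - TOTAL_DROP
annual_decline = 1 - (1 - TOTAL_DROP) ** (1 / YEARS)

def monthly_cost(tokens_per_month: int, price_per_1k: float) -> float:
    """Cost in USD of processing a monthly token volume at a given price."""
    return tokens_per_month / 1000 * price_per_1k

volume = 50_000_000  # hypothetical workload: 50M tokens per month
print(f"Price per 1K tokens: ${START_PRICE_PER_1K:.3f} -> ${end_price_per_1k:.3f}")
print(f"Implied annual decline: {annual_decline:.0%}")
print(f"50M tokens/month: ${monthly_cost(volume, START_PRICE_PER_1K):,.0f} -> "
      f"${monthly_cost(volume, end_price_per_1k):,.0f}")
```

A steady decline of roughly 54% a year is what it takes to compound into a 90% drop over three years.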

NB: Tokens are tiny units of data that come from breaking down bigger chunks of information. AI models process tokens to learn the relationships between them and unlock capabilities including prediction, generation and reasoning. The faster tokens can be processed, the faster models can learn and respond. Source.
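For a feel of what "breaking down bigger chunks" means, here is a toy sketch of greedy longest-match subword tokenization, similar in spirit to how WordPiece applies its vocabulary (GPT-style models use byte-pair encoding instead). The six-entry vocabulary is hypothetical; real tokenizers learn tens of thousands of entries from data.

```python
# Hypothetical six-entry vocabulary; real tokenizers learn far larger ones.
TOY_VOCAB = {"token", "izat", "ion", "un", "lock", "s"}

def tokenize_word(word: str, vocab: set[str]) -> list[str]:
    """Split one word into subword tokens by greedy longest match."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest remaining piece first, shrinking until it is in the vocab.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            # No vocabulary entry starts at this position: emit an unknown marker.
            tokens.append("[UNK]")
            i += 1
    return tokens

def tokenize(text: str, vocab: set[str]) -> list[str]:
    """Lowercase, split on whitespace, then tokenize each word."""
    return [tok for word in text.lower().split() for tok in tokenize_word(word, vocab)]

print(tokenize("Tokenization unlocks", TOY_VOCAB))
# ['token', 'izat', 'ion', 'un', 'lock', 's']
```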

Developers and companies quickly began creating ways to turn basic AI outputs into practical tools that could fit into everyday work processes.

The AI market has organized itself around several clear business approaches:

Horizontal copilots like Microsoft 365 Copilot and GitHub Copilot work by adding AI features to software platforms many people already use. These products make money through their wide distribution, helping millions of users with everyday tasks across different applications.

Vertical agents focus deeply on specific fields where specialized knowledge matters most. Harvey serves the legal profession while BloombergGPT concentrates on financial applications. These tools charge premium subscription fees to businesses because they deliver highly specialized assistance for professional workflows.

Orchestration frameworks such as LangChain and LlamaIndex, often paired with vector databases like Pinecone, chain models, data sources, and tools together. They help development teams build custom AI applications without having to create basic components from scratch, serving as building blocks for the broader AI application community.
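Under the hood, much of what these frameworks orchestrate is retrieval-augmented generation (RAG): find the most relevant snippets, then paste them into the prompt. The sketch below uses plain word-count vectors and cosine similarity as a stand-in for an embedding model and a vector database; the documents and function names are hypothetical, and none of this is LangChain's, LlamaIndex's, or Pinecone's actual API.

```python
import math
import re
from collections import Counter

DOCS = {  # hypothetical knowledge base a wrapper might index
    "refunds": "Refunds are issued within 14 days of a cancelled order.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "pricing": "The Pro plan costs 20 dollars per seat per month.",
}

def vectorize(text: str) -> Counter:
    """Bag-of-words term counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the ids of the k documents most similar to the query."""
    q = vectorize(query)
    ranked = sorted(DOCS, key=lambda doc_id: cosine(q, vectorize(DOCS[doc_id])), reverse=True)
    return ranked[:k]

def build_prompt(query: str, k: int = 1) -> str:
    """Stuff the retrieved passages into the prompt that would go to an LLM."""
    context = "\n".join(DOCS[doc_id] for doc_id in retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

print(build_prompt("How many days does shipping take?"))
```

In a production stack the word counts would be replaced by embeddings and the `DOCS` dictionary by a vector database, but the retrieve-then-prompt flow stays the same.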

Safety and optimization tools like Guardrails and PromptLayer help organizations follow regulations while keeping costs under control. Financial officers and compliance teams particularly value these solutions as they navigate both budget constraints and regulatory requirements.
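As a rough illustration of what a "rail" does, here is a minimal Python sketch that checks a model's response against an expected JSON shape and masks email addresses before anything is logged or displayed. The schema, regex, and function names are assumptions for illustration; this is not the Guardrails library's API, and a simple email regex is far from complete PII detection.

```python
import json
import re

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
REQUIRED_KEYS = {"headline", "body"}  # hypothetical output schema

def redact_pii(text: str) -> str:
    """Mask email addresses before text is logged or shown to users."""
    return EMAIL_RE.sub("[REDACTED_EMAIL]", text)

def validate_output(raw: str) -> dict:
    """Parse a model response and enforce a minimal schema.

    Raises ValueError so the caller can retry or fall back instead of
    passing malformed output downstream.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return {key: redact_pii(str(value)) for key, value in data.items()}

ok = validate_output('{"headline": "Spring sale", "body": "Email jane@example.com for details"}')
print(ok["body"])  # Email [REDACTED_EMAIL] for details
```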

Wrapper Economics

Think of the Wrapper Economy as a rapidly expanding ecosystem of companies, products, and services built around powerful Large Language Models like GPT-4, Gemini, and LLaMA.

Wrappers are specialized software layers that transform the raw capabilities of foundational AI models into practical, usable tools for everyday users, from marketers and legal professionals to educators and developers.

From an economic point of view, the declining cost of tokens does not automatically translate into higher profit margins for the companies building on top of language models, aka wrappers.

Wrappers typically maintain gross margins around 50% despite the continuous drops in underlying API pricing.

Sources for the table data: BoredGeekSociety, Andreessen Horowitz, VentureBeat, MostlyMetrics.

The pattern here is that whenever major providers like OpenAI or Google announce price reductions, end customers rapidly expect those savings to pass through to them, often within weeks.
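A toy unit-economics model shows why an upstream price cut does not automatically fatten a wrapper's profit. All figures are hypothetical per-seat monthly numbers: if the wrapper keeps its price it pockets the saving, but if customers force the saving through dollar-for-dollar, gross profit stays flat while revenue shrinks.

```python
from dataclasses import dataclass

@dataclass
class Unit:
    price: float       # monthly price per seat (USD), hypothetical
    token_cost: float  # API spend attributable to that seat
    other_cogs: float  # hosting, support, compliance tooling

    @property
    def gross_profit(self) -> float:
        return self.price - self.token_cost - self.other_cogs

    @property
    def gross_margin(self) -> float:
        return self.gross_profit / self.price

baseline = Unit(price=30.0, token_cost=12.0, other_cogs=3.0)
API_CUT = 0.5  # hypothetical 50% upstream price cut
saving = baseline.token_cost * API_CUT

# Scenario A: the wrapper keeps its price and pockets the saving.
kept = Unit(baseline.price, baseline.token_cost - saving, baseline.other_cogs)
# Scenario B: customers demand the saving dollar-for-dollar.
passed = Unit(baseline.price - saving, baseline.token_cost - saving, baseline.other_cogs)

for name, u in [("baseline", baseline), ("keep price", kept), ("pass through", passed)]:
    print(f"{name:12} revenue ${u.price:5.2f}  gross profit ${u.gross_profit:5.2f}  "
          f"margin {u.gross_margin:.0%}")
```

In the pass-through case the margin percentage rises only because revenue shrank; gross profit in dollars does not grow at all, which is the squeeze described above.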

That’s why wrapper companies face margin pressure unless they have established key competitive advantages:

Proprietary data assets that improve model performance beyond what generic APIs can provide

Deep workflow integration that makes their solution difficult to replace or replicate

Specialized domain expertise that allows them to command premium pricing

The economics become further constrained by multiple layers of markup in the AI supply chain:

The "Nvidia tax": the roughly 80% gross margins Nvidia commands on datacenter GPUs

Hyperscaler markups from cloud providers that host these models

Infrastructure costs for reliability, compliance, and security requirements
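To see how these layers compound, here is a deliberately crude sketch. It treats each layer's only cost as the price charged by the layer below and applies the identity price = cost / (1 - gross margin). Nvidia's ~80% and the wrapper's ~50% come from this piece; the hyperscaler and model-provider margins are hypothetical placeholders, and infrastructure costs for reliability and compliance are left out.

```python
# Gross margin g means: price = cost / (1 - g).
LAYERS = [
    ("Nvidia (datacenter GPUs)", 0.80),      # ~80%, from the text above
    ("Hyperscaler (cloud hosting)", 0.40),   # hypothetical placeholder
    ("Model provider (API)", 0.50),          # hypothetical placeholder
    ("Wrapper (your product)", 0.50),        # ~50%, from the text above
]

def price_with_margin(cost: float, gross_margin: float) -> float:
    """Price a layer must charge to earn `gross_margin` on its input cost."""
    return cost / (1 - gross_margin)

price = 1.0  # start from $1 of underlying chip cost
for name, margin in LAYERS:
    # Simplification: each layer's only cost is the layer below's price.
    price = price_with_margin(price, margin)
    print(f"{name:28} cumulative price: ${price:6.2f}")
```

Under these placeholder numbers, $1 of silicon cost ends up as roughly $33 of end-customer price, so a wrapper's 50% margin sits on top of a very expensive stack.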

Technical infrastructure differences create additional competitive dynamics. Google's TPU architecture reportedly gives Gemini up to ~80% lower per-token cost than GPT-4 on Azure (independent tests vary).

Companies building exclusively on OpenAI's technology feel this cost differential directly in their unit economics, which puts them at a structural disadvantage relative to competitors with more flexible infrastructure strategies.
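One way to quantify the multi-vendor argument: a short sketch of the blended per-token cost when part of the traffic moves to a backend that is 80% cheaper (the upper end of the reported figure above, which independent tests do not always reproduce). The $10 per 1M tokens base price is hypothetical, and the sketch ignores quality differences between models, which in practice decide how much traffic can safely move.

```python
def blended_cost(base_price: float, discount: float, share_cheaper: float) -> float:
    """Average price per 1M tokens when a share of traffic goes to a cheaper backend.

    base_price: price per 1M tokens on the default backend (hypothetical)
    discount: how much cheaper the alternative is (0.8 means 80% lower)
    share_cheaper: fraction of traffic routed to the cheaper backend
    """
    cheaper_price = base_price * (1 - discount)
    return (1 - share_cheaper) * base_price + share_cheaper * cheaper_price

BASE = 10.0  # hypothetical USD per 1M tokens for a single-vendor setup
for share in (0.0, 0.25, 0.5, 0.75):
    print(f"{share:.0%} routed to cheaper backend -> "
          f"${blended_cost(BASE, 0.8, share):.2f} per 1M tokens")
```

Routing half the traffic cuts the blended cost from $10 to $6 per 1M tokens in this example.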

These economic realities suggest we are likely to see some of the following next:

Market consolidation as smaller players struggle to maintain viable margins

Downward valuation adjustments for companies whose primary offering consists of minimal interface layers

Vertical integration attempts by successful companies seeking to control more of their cost structure

Strategic pivots toward higher-value services less dependent on raw token economics

Sustainable business models will increasingly require differentiation beyond basic API access, focusing instead on unique value creation that justifies premium pricing.

For a balanced set of examples beyond the ones mentioned above, we have:

a. Established companies that built a wrapper on top of their core offerings and services, like Grammarly, Descript AI, Notion AI, etc.

b. Wrapper start-ups like Perplexity AI, Windsurf, etc.

Quick note: when I added Windsurf here, I started asking myself, are “vibe-coding” tools essentially wrappers?

Short answer: yes. When a vibe-coding tool like Lovable (which explicitly integrates with LLMs from OpenAI and Anthropic) arbitrates which model to call and adds caching, observability, or fallback logic, it’s a vertical wrapper. That, my friends, is another newsletter of its own.
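Here is a minimal Python sketch of that arbitration layer: a response cache, an ordered list of providers with fallback when one fails, and a call log for observability. The provider functions and class names are hypothetical stand-ins; a real router would wrap vendor SDKs, add timeouts, and probably pick models by cost or task rather than a fixed order.

```python
import hashlib
from typing import Callable

Provider = Callable[[str], str]  # takes a prompt, returns a completion

class ProviderError(Exception):
    """Raised by a provider client on timeouts, rate limits, or outages."""

class Router:
    """Hypothetical wrapper routing layer: cache, ordered fallback, call log."""

    def __init__(self, providers: list[tuple[str, Provider]]):
        self.providers = providers            # tried in order, first success wins
        self.cache: dict[str, str] = {}
        self.log: list[tuple[str, str]] = []  # (provider, outcome) for observability

    def complete(self, prompt: str) -> str:
        key = hashlib.sha256(prompt.encode()).hexdigest()
        if key in self.cache:
            self.log.append(("cache", "hit"))
            return self.cache[key]
        for name, call in self.providers:
            try:
                answer = call(prompt)
            except ProviderError:
                self.log.append((name, "error"))
                continue  # fall back to the next provider
            self.log.append((name, "ok"))
            self.cache[key] = answer
            return answer
        raise ProviderError("all providers failed")

# Stand-in clients; real ones would call vendor SDKs.
def flaky_vendor(prompt: str) -> str:
    raise ProviderError("429 Too Many Requests")

def backup_vendor(prompt: str) -> str:
    return f"backup answer to: {prompt}"

router = Router([("vendor_a", flaky_vendor), ("vendor_b", backup_vendor)])
print(router.complete("Summarize Q3 campaign results"))
print(router.complete("Summarize Q3 campaign results"))  # served from cache
print(router.log)
```

On the first call vendor_a fails and vendor_b answers; the identical second request is served from the cache without touching either vendor.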

Platform Kill-Zones: Is the landlord moving in?

The GenAI hype often blinds us to an uncomfortable dynamic: when platform providers decide to enter markets previously nurtured by independent innovators, entire product ecosystems can vanish overnight.

Case in point: OpenAI's DevDay in November 2023.

In a single keynote, the launch of GPT-4 Turbo (a 128K-token context window, improved function calling, and prices cut by roughly 50-67% versus GPT-4), together with the Assistants API's built-in retrieval, effectively wiped out startups built on narrower value propositions. Companies specializing in agents, RAG, and third-party plugins saw their competitive advantage evaporate instantly.

The wrapper vulnerability goes beyond direct feature competition. Platform providers exercise considerable influence through various control mechanisms in their ecosystem:

Terms of service modifications that can redefine permissible use cases

API rate limit adjustments that affect operational capacity and reliability

Pricing strategy shifts that impact downstream business models

Usage policy changes that may require significant product redesigns
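Rate-limit changes are the control lever wrappers feel most directly in production. A common defensive pattern is retrying with exponential backoff and random jitter, sketched below; the `RateLimited` exception and the retry parameters are assumptions, so map them to whatever your provider's client actually raises (typically on HTTP 429).

```python
import random
import time

class RateLimited(Exception):
    """Hypothetical error a client raises on HTTP 429."""

def call_with_backoff(call, max_retries: int = 5, base_delay: float = 0.5,
                      max_delay: float = 30.0, sleep=time.sleep):
    """Retry `call` on RateLimited with exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimited:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # The delay cap doubles each attempt; jitter spreads out retries.
            delay = random.uniform(0, min(max_delay, base_delay * 2 ** attempt))
            sleep(delay)

# Usage: a fake call that is rate-limited twice, then succeeds.
attempts = {"n": 0}

def sometimes_limited() -> str:
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise RateLimited()
    return "ok"

delays = []  # record waits instead of sleeping, to keep the demo instant
result = call_with_backoff(sometimes_limited, sleep=delays.append)
print(result, attempts["n"], len(delays))  # ok 3 2
```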

Also, wrappers exclusively reliant on OpenAI technology operate with an inherent cost structure disadvantage that directly affects their competitive positioning and long-term viability.

AI Regulation Neighbourhood Watch

Governments are sprinting from guidance to enforcement, and consumer‑facing AI wrappers sit squarely in the blast radius. The next 18‑24 months will determine which products clear the compliance bar and which ones implode.

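EU AI Act: Classifies LLM apps used in sensitive areas such as hiring, credit scoring, or education as “high-risk.” Expect mandatory transparency labels, training-data traceability, pre-launch robustness checks, and fines of up to €35M or 7% of global annual turnover for the most serious violations.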

Privacy regulators are already firing – Italy’s 2023 GDPR-based ban on ChatGPT proved watchdogs will shut a service down first and negotiate later. Any app handling personal data must build consent flows and erasure tooling now.

US: EO 14110 rescinded, but pressure remains – the White House flip-flop shifts the front line to the FTC, which promises to treat untested or deceptive AI as an “unfair practice.” Audits and bias probes are still on the table.

Sector/local rules stack up – NYC bias‑audit mandate for hiring AI, HIPAA constraints on health bots, EU/UK content‑labelling rules. Each geography adds another compliance checklist.

China: license or leave – Since Aug 2023, generative AI must register, censor content, and log user data to stay live. Western wrappers face near‑zero wiggle room.
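For the “consent flows and erasure tooling” point in the privacy bullet above, here is a minimal sketch of the application-side plumbing: store a user's prompts only with consent, and delete everything on an erasure request. The class, the purpose name, and the in-memory storage are hypothetical; real compliance also has to reach logs, backups, analytics, and any copies held by model providers.

```python
from dataclasses import dataclass, field

@dataclass
class UserDataStore:
    """Hypothetical in-memory store illustrating consent checks and erasure."""
    consents: dict[str, set[str]] = field(default_factory=dict)  # user -> purposes
    prompts: dict[str, list[str]] = field(default_factory=dict)  # user -> stored prompts

    def grant(self, user_id: str, purpose: str) -> None:
        """Record that a user consented to a given processing purpose."""
        self.consents.setdefault(user_id, set()).add(purpose)

    def save_prompt(self, user_id: str, prompt: str) -> bool:
        """Persist a prompt only if the user consented to storage."""
        if "store_prompts" not in self.consents.get(user_id, set()):
            return False
        self.prompts.setdefault(user_id, []).append(prompt)
        return True

    def erase(self, user_id: str) -> int:
        """Handle an erasure request: delete prompts and consents, return count removed."""
        removed = len(self.prompts.pop(user_id, []))
        self.consents.pop(user_id, None)
        return removed

store = UserDataStore()
print(store.save_prompt("u1", "draft my cover letter"))  # False: no consent yet
store.grant("u1", "store_prompts")
print(store.save_prompt("u1", "draft my cover letter"))  # True
print(store.erase("u1"))                                  # 1
```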

(De)centralization, anyone?

The story about decentralization often suggests a future where powerful AI language models are freely available to everyone. But how true is this really?

The promise

Open-source models: Llama 3, Gemma, Mistral 7B, and others have narrowed the gap with mid-tier commercial models. The marketing pitch: "AI for everyone."

The reality

Most AI usage still runs through the usual big tech platforms (Amazon Bedrock, Azure OpenAI, Google Vertex AI, Anthropic's API), so your data ends up in a handful of massive data centers.

At the same time, exclusive deals (OpenAI with the Associated Press, Reddit, the Financial Times, and Stack Overflow) lock the newest and best training data behind legal agreements, while open data collections like LAION struggle to match their quality and freshness.

Training remains tied to scarce, energy-hungry GPU clusters that cost millions to rent; GPU-sharing services help reduce costs somewhat but don't come close to matching the budgets of major players.

The end result: yes, you can run a compressed Llama model on your laptop, but the “cutting edge” remains controlled by the few players whose cloud supercomputers come with the best data and massive energy resources.

Net effect:

Using AI models is becoming more decentralized (compressed 7B models on laptops).

Training AI models remains centralized: advantages in data, computing power, and energy are difficult barriers to overcome.
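The laptop side of this split is largely a memory story. Below is a quick sketch that estimates weight memory for a 7B-parameter model at different precisions and shows the simplest form of quantization (symmetric, per-tensor int8). It counts weights only, ignoring activations and cache, and real 4-bit schemes typically use per-group scales rather than one scale for the whole tensor.

```python
def weights_gb(params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9

for bits in (16, 8, 4):
    print(f"7B params at {bits}-bit: ~{weights_gb(7e9, bits):.1f} GB")

def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by q * scale."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # guard against all-zero input
    return [round(w / scale) for w in weights], scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]

w = [0.42, -1.27, 0.05, 0.9]
q, s = quantize_int8(w)
print(q, [round(x, 3) for x in dequantize(q, s)])
```

At 16 bits a 7B model needs about 14 GB for weights alone; at 4 bits that falls to about 3.5 GB, which is why compressed models fit on consumer hardware.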

Likely end-state: A two-tier ecosystem?

Local/open tier — widely available models, edge devices, specialized applications, lots of experimentation.

Supercomputer tier — a few companies racing ahead with private data and massive computing clusters.

If the quality gap between these tiers shrinks, decentralization wins naturally.

If the gap widens, the "open" ecosystem will still revolve around a few dominant players, no matter how many GitHub repositories claim to be "open source."

Hype keeps investors coming

In 2024, investors poured an estimated $56 billion into genAI startups and more than $100 billion into AI overall. Dozens of funding rounds sailed past $100 million, and a handful broke the billion-dollar mark, evidence that hype, not hard financials, is steering the wheel.

Early 2025 cranked the frenzy up another notch. SoftBank signaled it might add as much as $40 billion to OpenAI, and Anthropic secured a $3.5 billion round in March. Fear of missing out now shouts louder than proven revenue.

As I said this week on LinkedIn, to separate real value from marketing, watch secondary-share prices, GPU spot rates, and revenue-per-token. When venture subsidies dry up, any startup running below 50 percent gross margins without proprietary data or deep workflow lock-in will be first in line for consolidation, or collapse.
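The screening rule above is easy to write down. This sketch computes gross margin and revenue per 1K tokens for two made-up companies and flags the profile described: under 50% gross margin with neither proprietary data nor deep workflow lock-in. Every number and name is hypothetical, and secondary-share prices and GPU spot rates are left out because they come from market data, not company books.

```python
from dataclasses import dataclass

@dataclass
class WrapperSnapshot:
    """Hypothetical metrics for one AI wrapper company over one period."""
    name: str
    revenue: float           # USD
    inference_cost: float    # USD spent on tokens/GPUs
    other_cogs: float        # USD: hosting, support, etc.
    tokens_processed: float  # tokens served
    proprietary_data: bool
    deep_workflow_lockin: bool

    @property
    def gross_margin(self) -> float:
        return (self.revenue - self.inference_cost - self.other_cogs) / self.revenue

    @property
    def revenue_per_1k_tokens(self) -> float:
        return self.revenue / self.tokens_processed * 1000

def consolidation_risk(s: WrapperSnapshot, margin_floor: float = 0.5) -> bool:
    """Flag thin margins combined with no data or workflow moat."""
    has_moat = s.proprietary_data or s.deep_workflow_lockin
    return s.gross_margin < margin_floor and not has_moat

wrapper_a = WrapperSnapshot("wrapper_a", 100_000, 55_000, 10_000, 2e9, False, False)
wrapper_b = WrapperSnapshot("wrapper_b", 100_000, 30_000, 10_000, 5e8, True, True)
for s in (wrapper_a, wrapper_b):
    print(f"{s.name}: margin {s.gross_margin:.0%}, "
          f"${s.revenue_per_1k_tokens:.3f} revenue per 1K tokens, "
          f"at risk: {consolidation_risk(s)}")
```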

Investors clearly believe generative AI will pay off long-term, but the current flood of money looks more like a bubble than solid, fundamentals-driven funding.

Final thoughts (for now)

The AI wrapper economy stands at a fascinating crossroads. Over the next five years, things could go almost anywhere: steady co-evolution of players big and small, consolidation around tech titans, a decentralized breakthrough, or even a regulatory shock that reshapes the entire space. Who even knows anymore?!

What's clear across all scenarios is that we're witnessing a transitional phase.

The wrapper economy represents our collective attempt to harness raw AI potential into practical, everyday tools. It's messy, uneven, and rife with both opportunity and risk.

And I repeat: the most important signals to watch won't be the funding announcements or the marketing hype, but the structural indicators:

token economics

GPU spot rates

diversity of successful AI applications across industries

These metrics will tell us whether we're heading toward a future dominated by a few AI utilities or a rich ecosystem of specialized solutions.

I must admit, this is the most researched and tedious edition I’ve written yet: I had to cross-check multiple sources many times, read different POVs on the same topics, and then filter them through my own experience and understanding. I really hope it was useful to you all.

Leaving the curious folks with some further reading recommendations and sources below.

Until next time, I need to touch grass.

x

Juliana

Sources & Further Reading Recommendations

https://www.bvp.com/atlas/roadmap-ai-infrastructure

https://a16z.com/llmflation-llm-inference-cost/

https://openai.com/index/better-language-models/

https://www.mintz.com/insights-center/viewpoints/2166/2025-03-10-state-funding-market-ai-companies-2024-2025-outlook

https://www.forbes.com/sites/bernardmarr/2023/05/19/a-short-history-of-chatgpt-how-we-got-to-where-we-are-today/

https://medium.com/@ignacio.de.gregorio.noblejas/openai-just-killed-an-entire-market-in-45-minutes-818b2a8ad33e

https://arpu.hedder.com/generative-ai-funding-soars-to-record-56-billion-in-2024/

https://ediscoverytoday.com/2025/01/06/generative-ai-funding-reached-new-heights-in-2024-artificial-intelligence-trends/

https://www.theguardian.com/technology/2023/feb/02/chatgpt-100-million-users-open-ai-fastest-growing-app

https://techcrunch.com/2023/07/18/meta-releases-llama-2-a-more-helpful-set-of-text-generating-models/

https://klu.ai/blog/open-source-llm-models

https://techcrunch.com/2024/02/08/google-goes-all-in-on-gemini-and-launches-20-paid-tier-for-gemini-ultra/

https://techcrunch.com/2025/04/23/here-are-the-19-us-ai-startups-that-have-raised-100m-or-more-in-2025/

https://telanganatoday.com/githubs-annual-revenue-run-rate-hits-2-billion-driven-by-copilot-nadella

https://www.cbinsights.com/research/report/artificial-intelligence-top-startups-2025/

https://venturebeat.com/ai/the-new-ai-calculus-googles-80-cost-edge-vs-openais-ecosystem/

https://www.wheresyoured.at/openai-is-a-systemic-risk-to-the-tech-industry-2/

https://www.reddit.com/r/ChatGPTCoding/comments/17vbp06/big_changes_to_openai_terms_of_service/

https://the-decoder.com/study-shows-evidence-of-degradation-in-chatgpts-performance-since-march/

https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

https://www.nature.com/articles/s41586-024-07566-y

https://www.federalregister.gov/documents/2023/11/01/2023-24283/safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence
